AI Programming Tutorials

Local RAG Cookbook (GitHub Repo)

You can build a capable RAG system that runs entirely on your own hardware using Ollama, pgvector, and local data.
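To make the retrieve-then-prompt idea concrete, here is a minimal sketch of the retrieval step in plain Python. It is not taken from the cookbook: the word-count `embed` function is a toy stand-in for a real embedding model, the in-memory `DOCS` list stands in for a pgvector table, and `build_prompt` is a hypothetical helper whose output you would pass to a locally served model (for example one run by Ollama).

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (assumption): lowercase word counts instead of a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(
        documents,
        key=lambda d: cosine_similarity(q, embed(d)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Assemble the retrieved passages and the question into one prompt."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{joined}\n\n"
        f"Question: {query}\n"
    )


# Hypothetical local data; a real system would store embeddings in a database.
DOCS = [
    "pgvector adds a vector column type and similarity search to Postgres.",
    "Ollama runs large language models on a local machine.",
    "Sourdough bread needs a long, slow fermentation.",
]

if __name__ == "__main__":
    question = "How do I run a language model on my local machine?"
    print(build_prompt(question, retrieve(question, DOCS, k=1)))
```

In a real setup the ranking would be done by the database rather than by sorting a Python list, and the embeddings would come from a model instead of word counts.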

From Hacker News, "Ask HN: Daily practices for building AI/ML skills?", including this reply:

I assume you’re talking about the latest advances and not just regression and PAC learning fundamentals. I don’t recommend following a linear path - there are too many rabbit holes. Do 2 things - a course and a small course project. Keep it time-bound and aim to finish no matter what. Do not dabble outside of this for a few weeks :)

Then find an interesting area of research, find its GitHub repo and run that code. Find a way to improve it and/or use it in an app.

Some ideas.

do the fast.ai course (https://www.fast.ai/)

read Karpathy’s blog posts about how transformers/LLMs work (https://lilianweng.github.io/posts/2023-01-27-the-transforme… for an update)

Stanford CS231n on vision basics (https://cs231n.github.io/)

CS324 language models (https://stanford-cs324.github.io/winter2022/)

Now, find a project you’d like to do.

eg: https://dangeng.github.io/visual_anagrams/

or any of the ones that are posted to hn every day.

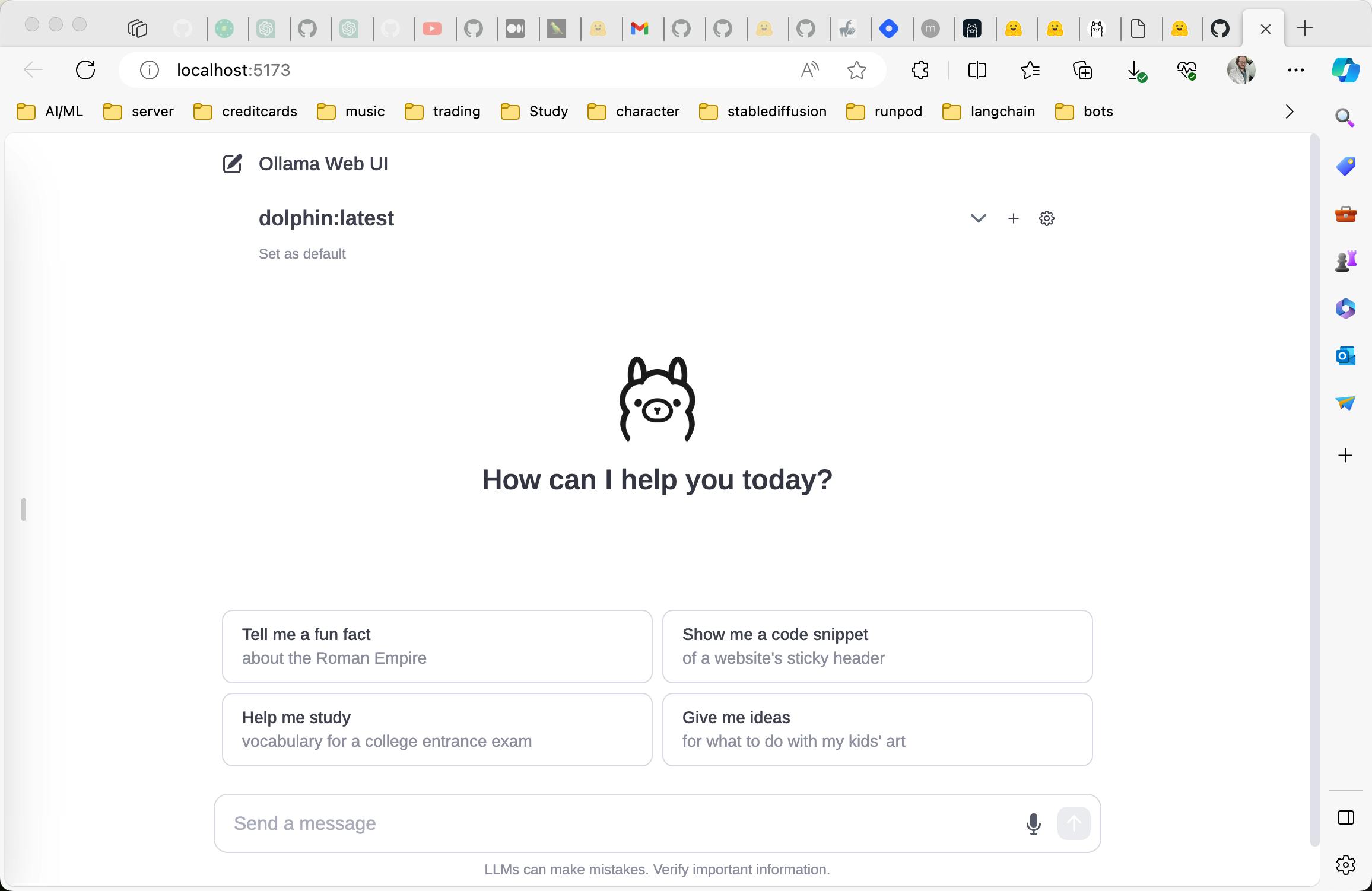

Eric Hartford describes step by step how to run a set of Ollama-based LLMs locally for conversation and chat. Model sizes start at 3 GB and need 6 GB or more of RAM.

Tony Sun’s deep dive, Intro to Real-Time Machine Learning: a lengthy description for data scientists and machine learning engineers who want a better understanding of the underlying data pipelines that serve features for real-time prediction.

Simon Willison (@simonw) explains in great detail How to use Code Interpreter for an extensive Python task. Also see his podcast transcript where he goes into more detail about “Code Interpreter as a weird kind of intern”.

ChatGPT Plugins

Tutorials and Courses

Fine-tuning GPT with OpenAI, Next.js and Vercel AI SDK, from Vercel: a step-by-step guide to building a specialized model for a specific task (e.g. Shakespeare).

A GitHub list of good sources for learning about ML and LLMs.

Focus on the speed at which you can run valid experiments; it is the only way to find a viable model for your problem.

Andrej Karpathy’s Intro to Large Language Models is a one-hour lecture intended for complete beginners. Also see his two-hour YouTube video, Let’s build GPT: from scratch, in code, spelled out, which is part of his Neural Networks: Zero to Hero playlist.

Getting Started with Transformers and GPT

Stanford CS324 Understanding LLMs

Recommended Videos

From GitHub: @krishnaik06

3. Basic Deep Learning Concepts (Day 1- Day 5)

- ANN - Working Of MultiLayered Neural Network

- Forward Propagation, Backward Propagation

- Activation Functions, Loss Functions

- Optimizers
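The concepts in this list fit into one small example. The sketch below is not from the video series; it is a minimal illustration assuming a single sigmoid neuron, binary cross-entropy loss, and plain stochastic gradient descent as the optimizer, trained on the logical OR function. All names (`forward`, `train`, `OR_DATA`) are made up for the example.

```python
import math


def sigmoid(z: float) -> float:
    """Activation function: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(w: list[float], b: float, x: tuple[float, ...]) -> float:
    """Forward propagation: weighted sum of inputs, then activation."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)


def bce_loss(p: float, y: float) -> float:
    """Loss function: binary cross-entropy for one prediction."""
    eps = 1e-12  # avoids log(0)
    return -(y * math.log(p + eps) + (1.0 - y) * math.log(1.0 - p + eps))


def train(data, lr: float = 0.5, epochs: int = 2000):
    """Optimizer: plain SGD, one update per training example."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            p = forward(w, b, x)
            # Backward propagation: for sigmoid + cross-entropy,
            # the gradient of the loss with respect to z is (p - y).
            dz = p - y
            w = [wi - lr * dz * xi for wi, xi in zip(w, x)]
            b -= lr * dz
    return w, b


# Truth table for logical OR: (inputs, target)
OR_DATA = [
    ((0.0, 0.0), 0.0),
    ((0.0, 1.0), 1.0),
    ((1.0, 0.0), 1.0),
    ((1.0, 1.0), 1.0),
]

if __name__ == "__main__":
    w, b = train(OR_DATA)
    for x, y in OR_DATA:
        print(x, "target", y, "predicted", round(forward(w, b, x), 3))
```

A multilayer network repeats the same forward and backward steps layer by layer, with the chain rule carrying the gradient from the output back to earlier weights.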

4. Advanced NLP Concepts (Day 6 - Last Video)

- RNN, LSTM RNN

- GRU RNN

- Bidirectional LSTM RNN

- Encoder Decoder, Attention Is All You Need, Seq to Seq

- Transformers
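The attention step at the heart of the Transformer can be sketched in a few lines. This is an illustrative pure-Python version of scaled dot-product attention, softmax(QKᵀ/√d_k)·V, with no batching, masking, or multiple heads; the function names and the list-of-lists layout are assumptions made for the example, not code from the videos.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention.

    queries: list of vectors of length d_k
    keys:    list of vectors of length d_k
    values:  list of vectors, one per key
    Returns (outputs, weights), with one row per query.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    Q = [[1.0, 0.0]]
    K = [[1.0, 0.0], [0.0, 1.0]]
    V = [[10.0, 0.0], [0.0, 10.0]]
    out, w = attention(Q, K, V)
    print("weights:", w[0])
    print("output:", out[0])
```

Each query produces a set of weights that sum to 1, so the output is always a blend of the value vectors, tilted toward the values whose keys best match the query.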

5. Starting the Journey Towards Generative AI (GPT-4, Mistral 7B, LLAMA, Hugging Face Open Source LLM Models, Google PaLM Model)

5. Vector Databases And Vector Stores

- ChromaDB

- FAISS vector database, which makes use of the Facebook AI Similarity Search (FAISS) library

- LanceDB vector database based on the Lance data format

- Cassandra DB for storing vectors

6. Deployment Of LLM Projects

- AWS

- Azure

- LangSmith

- LangServe

- HuggingFace Spaces